Facebook testing is a widely discussed method in digital marketing, yet not every advertiser knows how to effectively implement it in their ad campaigns. So, what is the optimal way to conduct Facebook testing? And what success stories have emerged from it? Let’s dive into the insights in this article by Nemi Ads.

1. What is Facebook Testing and Why Does It Work?

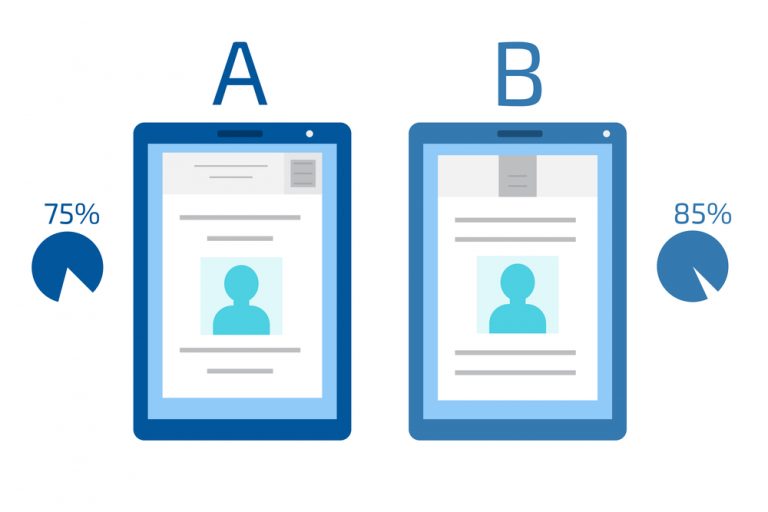

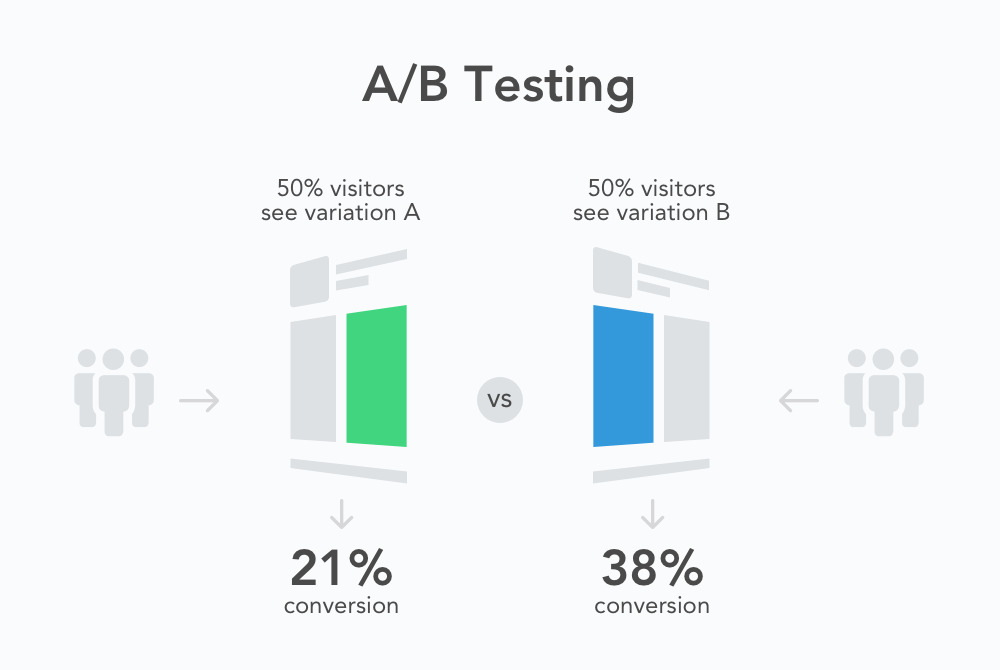

Facebook Testing is also known as A/B Testing or Split Testing. Understanding its fundamentals gives you a clear picture of what the method can do and how it helps optimize your ad campaigns.

1.1. What is Facebook Testing?

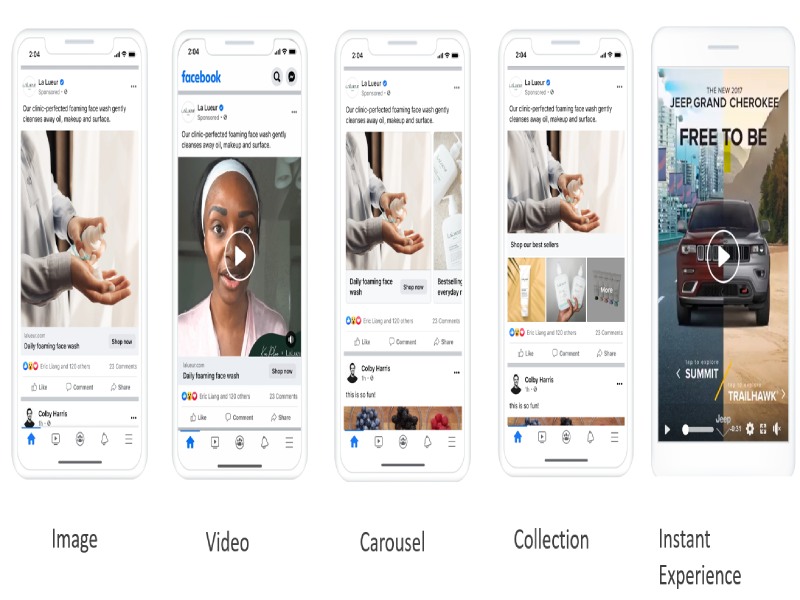

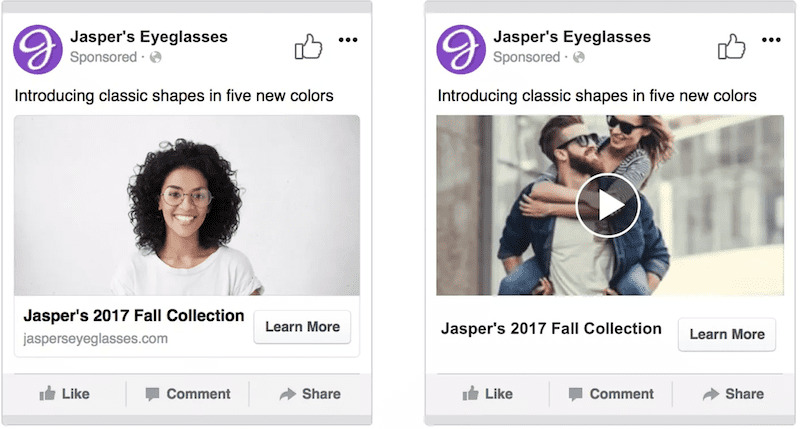

Facebook Testing is a method that lets advertisers compare two or more versions of an ad that differ in an element such as the target audience, content, visuals, or ad placement. Based on the collected data, advertisers can then select the most effective version for their campaign.

1.2. Why Does Facebook Testing Work?

Here’s why Facebook Testing works for ad campaigns:

- Identifying the best ad version for your audience: By comparing different ad versions, advertisers can pinpoint the most effective combination of content, visuals, and placement that resonates with their audience, leading to higher engagement and conversions.

- Improving similar campaigns: Successful variations can be applied to current or future campaigns, helping advertisers optimize their ads while saving time and effort.

- Boosting ROI: Ads that are tailored to the audience are more likely to achieve the desired conversion goals, which in turn improves return on investment (ROI).

- Optimizing ad spend: Split Testing shows which audience and setup deliver results most efficiently, so you can shift budget toward them and get better results at a lower cost.

2. How to Run Facebook Testing in Ads Manager

Understanding the steps of Split Testing is crucial for running it smoothly and efficiently from the start. Here’s the process recommended by Nemi Ads experts.

- Defining the Objective of Split Testing

The first step is to clearly define the goal of your test, which could be increasing impressions, driving more clicks, boosting engagement, increasing website traffic, or improving conversion rates.

- Selecting and Creating Ad Variations

Once you’ve set your objective, follow these steps to create ad variations:

- Step 1: Go to Ads Manager.

- Step 2: Select the ad set(s) you want to test.

- Step 3: Click on “Create A/B Test.”

- Step 4: Choose the element you want to test (audience, ad creative, placement, etc.) and follow the on-screen instructions.

- Analyzing the Split Test Results

Once the test has finished, analyze the results based on real data. Key metrics to evaluate include click-through rate (CTR), conversion rate, return visits to your landing page or website, average time on page, and bounce rate. Depending on your objective, focus on the metric that aligns most closely with your goal to determine the best-performing ad variation.
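To make the analysis step more concrete, here is a minimal Python sketch that computes CTR, conversion rate, and cost per acquisition (CPA) from raw counts and then picks a winner according to the objective. The `Variant` class, the objective names, and all figures are hypothetical; in practice you would take these numbers from your test results in Ads Manager.

```python
from dataclasses import dataclass


@dataclass
class Variant:
    """Raw counts for one ad variation (hypothetical figures for illustration)."""
    name: str
    impressions: int
    clicks: int
    conversions: int
    spend: float  # total spend in the account currency


def ctr(v: Variant) -> float:
    # Click-through rate: share of impressions that led to a click.
    return v.clicks / v.impressions


def conversion_rate(v: Variant) -> float:
    # Share of clicks that led to a conversion.
    return v.conversions / v.clicks


def cpa(v: Variant) -> float:
    # Cost per acquisition; assumes at least one conversion.
    return v.spend / v.conversions


# Hypothetical mapping from objective to (deciding metric, higher_is_better).
OBJECTIVE_METRICS = {
    "clicks": (ctr, True),
    "conversions": (conversion_rate, True),
    "cost": (cpa, False),
}


def pick_winner(variants: list[Variant], objective: str) -> Variant:
    metric, higher_is_better = OBJECTIVE_METRICS[objective]
    if higher_is_better:
        return max(variants, key=metric)
    return min(variants, key=metric)


# Same budget and impressions, different creatives (made-up numbers).
a = Variant("A", impressions=20_000, clicks=300, conversions=15, spend=250.0)
b = Variant("B", impressions=20_000, clicks=260, conversions=18, spend=250.0)

for objective in OBJECTIVE_METRICS:
    print(objective, "->", pick_winner([a, b], objective).name)
# Prints:
# clicks -> A
# conversions -> B
# cost -> B
```

Notice that the same two variations produce different winners depending on the objective, which is why the deciding metric should follow from the goal you set at the start.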

3. Optimize Your Campaign with Best Practices for Facebook Testing

Following a clear process is essential, but applying proven strategies will further optimize your campaign’s performance and set you apart from the competition. Here are some effective tips to implement.

3.1. Have a Clear Hypothesis before Starting the Test

Before running a split test, define a clear hypothesis. Knowing exactly what you want to test saves time and resources. For example, a hypothesis could be: “Using automatic bidding will lower our CPA and increase conversion rates for this campaign.”

3.2. Test Only One Variable at a Time

It is important to test only one variable at a time, whether it’s the image, headline, CTA, or audience, so that any difference in results can be attributed to that variable. Testing multiple variables simultaneously makes it difficult to pinpoint what truly drives the campaign’s success.

3.3. Leverage Facebook’s Algorithm

Along with your defined target audience, take advantage of Facebook’s Advantage detailed targeting feature. It lets Facebook’s delivery system learn who responds to your ads and reach people beyond the detailed targeting options you selected when it predicts this will improve performance.

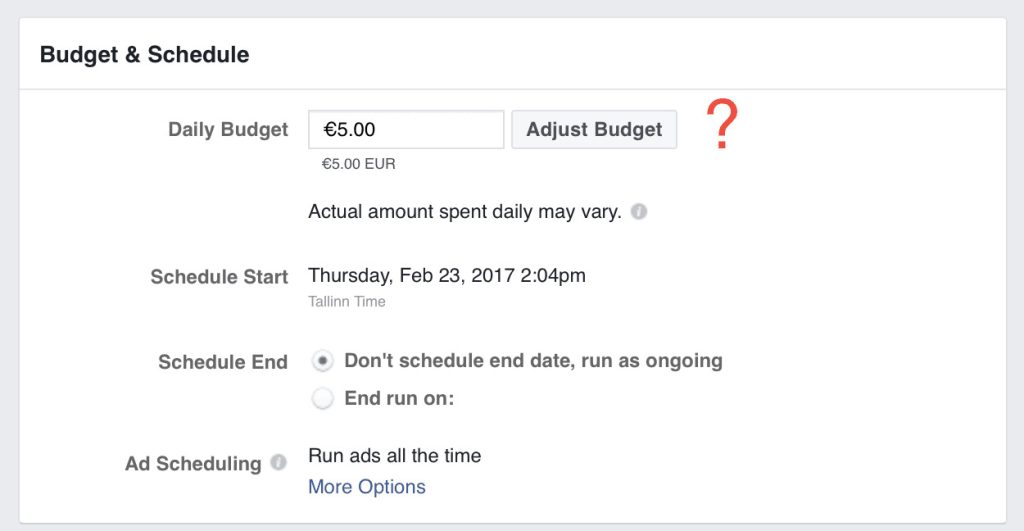

3.4. Allocate Sufficient Budget and Time

When it comes to budget, set a clear limit from the start to keep spending under control and avoid overspending. Plan it so that it is large enough to gather reliable data for every variation while still being used efficiently. According to LYFE Marketing, a recommended budget is $500 per month, or roughly $16 per day.

Ideally, run the test for at least 7 days so the results capture how behavior changes from day to day. Significant edits made during the test can reset the learning phase, so avoid changing anything once the test is running.
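The budget and duration guidance can be turned into a quick back-of-the-envelope calculation. This Python sketch assumes a 30-day month and an even split of spend across variations; both are simplifying assumptions for illustration, not a description of how Meta allocates budget. With the $500 monthly figure from LYFE Marketing, it gives about $16.67 per day, in line with the roughly $16 estimate.

```python
def plan_test_budget(monthly_budget: float, test_days: int = 7,
                     num_variations: int = 2, days_in_month: int = 30) -> dict:
    """Rough spend plan for a split test (assumes an even split across variations)."""
    if test_days < 7:
        # Follows the guideline of running a test for at least 7 days.
        raise ValueError("Run the test for at least 7 days.")
    daily = monthly_budget / days_in_month
    total = daily * test_days
    return {
        "daily": round(daily, 2),
        "test_total": round(total, 2),
        "per_variation": round(total / num_variations, 2),
    }


print(plan_test_budget(500))
# {'daily': 16.67, 'test_total': 116.67, 'per_variation': 58.33}
```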

3.5. Target a Suitable Audience Size for the Most Accurate Data

When defining your audience, choose a target size that is neither too broad nor too narrow. A broad audience can waste budget without delivering results, while a narrow audience may miss potential leads. Check the estimated size in the “Audiences” section on Facebook to find an appropriate range. In particular, avoid overly narrow audience setups during testing so that each variation gets enough reach.

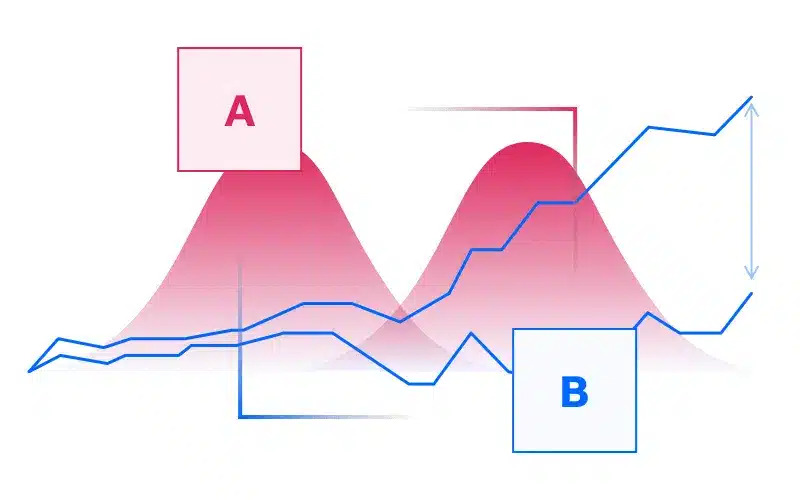

3.6. Analyze Results before Scaling

Don’t rush into scaling based on initial data. A thorough analysis ensures that the winning variation consistently outperforms others. This helps you avoid any assumptions based on early results and ensures a more informed decision when scaling.
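One common way to check whether a winning variation genuinely outperforms the others, rather than leading by chance, is a two-proportion z-test on conversion rates. The Python sketch below is a standard statistical check used here for illustration; it is not necessarily how Ads Manager calculates its own test results, and all figures are made up.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare two conversion rates; returns (z statistic, two-sided p-value)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that both variations perform the same.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("Not enough variation in the data to run the test.")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Early data: B looks better (about 6.9% vs 5.0%), but the sample is small.
_, p_early = two_proportion_z_test(15, 300, 18, 260)
# Later data: a similar gap over roughly ten times as many clicks.
_, p_later = two_proportion_z_test(150, 3000, 210, 3000)

for label, p in [("early", p_early), ("later", p_later)]:
    verdict = "significant" if p < 0.05 else "not significant yet"
    print(f"{label}: p = {p:.3f} ({verdict})")
```

With the small early sample the gap is not statistically significant, which is exactly the situation where scaling would be premature; with the larger sample, a similar gap is very unlikely to be due to chance alone.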

3.7. Consider Retesting to Adjust for Changing Trends

Facebook’s algorithms and your audience’s preferences evolve over time. To maintain high campaign effectiveness, you should retest regularly and adjust based on changing trends and behaviors.

3.8. Test Different Audiences in the Best Performing Ad Placement

Once you’ve identified the best-performing ad placement, experiment with different audiences in that specific placement. This allows you to refine your strategy and maximize the performance of your ads even further.

4. Three Successful Case Studies of Facebook Testing

Many businesses have implemented Split Testing and achieved impressive results. Below are three outstanding case studies with different goals and strategies for your reference.

4.1. Case Study 1: The Red Wagon – Boost Conversion Rate

For businesses looking to maximize conversions, precise audience targeting can make all the difference. The Red Wagon put this theory to the test with a structured A/B experiment.

- Goal: Increase conversion rate.

- Strategy: Target core audiences and those showing clear intent.

- Version A targeted a broad audience.

- Version B combined broad targeting with custom audiences and lookalike audiences. The results showed that Version B outperformed Version A.

- Outcome: The business achieved a 77% increase in purchases and reduced the cost per purchase by 57%.

Targeting the right audience is key to improving conversion rates. By combining broad targeting with custom and lookalike audiences, businesses can reach users with higher purchase intent, ultimately maximizing ad efficiency.

4.2. Case Study 2: ESL FACEIT Group – Maximize Reach

Reaching the right audience at scale is essential for any brand looking to expand its online presence. ESL FACEIT Group explored how broad targeting could enhance their ad reach and engagement.

- Goal: Expand ad reach.

- Strategy: Broaden the audience size.

- Version A used narrow targeting.

- Version B focused on a larger, more general audience, delivering better results.

- Outcome: ESL FACEIT Group successfully reduced cost per result by 59% while reaching more people likely to engage with the ad.

Beyond targeting intent-driven audiences, expanding audience size strategically can further enhance reach and engagement. A broader approach helps brands connect with new potential customers while maintaining cost efficiency.

4.3. Case Study 3: Moda in Pelle – Increase Advertising Revenue

Maximizing advertising revenue requires not only great products but also the right campaign structure. Moda in Pelle tested how automation could improve sales performance.

- Goal: Boost revenue from advertising.

- Strategy: Implement the Advantage+ shopping campaign.

- Version A was a manually configured sales campaign.

- Version B used an Advantage+ shopping campaign to automate optimization and proved more effective than Version A.

- Outcome: Increased return on ad spend (ROAS) by 24%.

While precise targeting and reach expansion are crucial, automating campaign optimization can further improve results. Meta Advantage+ Shopping campaigns demonstrate how AI-driven solutions can boost ROAS, making ad spend more effective with minimal manual effort.

>> Need a reliable ad account for your campaigns? Rent a Facebook ad account from Nemi Ads and get expert strategies to optimize your A/B testing. Our experienced team provides tailored guidance to help you maximize performance and achieve the best results with ease.

We hope this article has given you a clearer understanding of how to leverage Facebook Testing to optimize your advertising campaigns. Regularly testing and identifying the best-performing ad variations not only helps increase conversion rates and boost ROI but also reduces costs. Don’t forget to follow Nemi Ads for more valuable insights and advertising strategies to elevate your campaigns!